Integrating PowerBI with RDF from WikiData

Posted

Last year I posted about using Azure Data Factory (ADF) to ingest WikiData into the database. As my knowledge of Power Query (PQM) has continued to grow I realized it might be possible to accomplish the same results using PowerQuery rather than ADF.

On the face of it, this isn’t quite as useful since once data is in PowerBI it’s not really easy to get back out. While that doesn’t seem likely to change, Power Query is gradually gaining new execution environments: the support in ADF is something of a toy currently, but it’s easy to see it developing to something more powerful over the next few years. Microsoft, if you’re listening: a full PQM service that scales from single node to spark based and targets any destination accessible from ADF would be marvellous.

So, with that in mind and a request from a colleague for some help, here’s how to create a country dimension in Power BI using WikiData:

Getting data out of WikiData

In the ADF flow we used the bulk export of WikiData. That’s not really practical for this use case. That leaves three other options:

- Export by subject. Given an entity, for example, given https://www.wikidata.org/wiki/Q2321706 one can use the

Special:EntityDataform to get the underlying RDF, using the Accept header to control the format. In this case, https://www.wikidata.org/wiki/Special:EntityData/Q2321706 This doesn’t always seem totally performant, presumably because WikiData does some conversions on demand. - Export using Linked Data Fragments. If you can express what you want in terms of absolute matches on subject, predicate and object (or any combination of the same) the LDF endpoint is fast and easy to use and very REST friendly.

- Finally, we can use SPARQL. The WikiData Query Service is super friendly as well as RESTy but non trivial queries can end up timing out.

Just like ADF, Power Query doesn’t natively understand RDF, but it happily slurps up CSV, so the trick, as last year, is to represent everything in ntriples and massage from there.

Wrapping in PQM

Given these sources, here’s some sample PQM functions that expose them.

GetTriplesForSubject takes an entity URI and calls the Special:EntityData page to download all triples for that subject:

let

GetTriplesForSubject = (subject as text) as nullable table =>

let

EntityID = Text.End(subject,Text.Length(subject)-Text.PositionOf(subject,"/",Occurrence.Last)),

Options = [Headers = [#"Accept"="application/n-triples"]],

WikiURI = Text.Combine({"https://www.wikidata.org/wiki/Special:EntityData",EntityID}),

// Convert to CSV, parsing for ' ' as the separator

data = Csv.Document(Web.Contents(WikiURI,Options),{"Subject", "Predicate", "Object", "Terminator"}," "),

noTerminator = Table.RemoveColumns(data,"Terminator"),

cleaned = Table.TransformColumns(noTerminator,

{{"Subject", each CleanNTriple(_), type text},

{"Predicate", each CleanNTriple(_), type text},

{"Object", each CleanNTriple(_), type text}})

in

cleaned

in

GetTriplesForSubjectThe function relies on a helper, CleanNTriple, that we’ll cover further down.

GetLabel takes a subject URI and gets the English label for it using the SPARQL service. In theory, one could extract this out of the full set of triples for the subject but this function is way faster. The SPARQL service doesn’t return ntriples but will happily generate CSV.

let

GetLabel = (subject as text) as nullable table =>

let

// Request Ntriples from the LDF Service

Options = [Headers = [#"Accept"="text/csv"]],

FixedSubject = if Text.At(subject,0) <> "<" then Text.Combine({"<",subject,">"}) else subject,

Query = Text.Combine({"SELECT ?label WHERE {

SERVICE wikibase:label {

bd:serviceParam wikibase:language ""en"" .

",FixedSubject," <http://www.w3.org/2000/01/rdf-schema#label> ?label

}

}"}),

url = Text.Combine({"https://query.wikidata.org/sparql?",Uri.BuildQueryString([query=Query])}),

data = Csv.Document(Web.Contents(url,Options)),

promoted = Table.PromoteHeaders(data, [PromoteAllScalars=true])

in

promoted

in

GetLabel This function can easily be generalized to make an arbitrary SPARQL call.

The final function is GetAllTriplesMatching. This invokes the LDF service, handling pagination as necessary to get all fragments matching the pattern. The pagination technique is lifted largely from Microsoft’s TripPin tutorial.

let

GetAllTriplesMatching = (optional sub as text,optional pred as text, optional obj as text) as table =>

GeneratePage((previous) =>

let

QueryParams = [],

QPWithSubject = if sub <> null then Record.AddField(QueryParams,"subject",sub) else QueryParams,

QPWithObject = if pred <> null then Record.AddField(QPWithSubject,"predicate",pred) else QPWithSubject,

AllQueryParams = if obj <> null then Record.AddField(QPWithObject,"object",obj) else QPWithObject,

// if previous is null, then this is our first page of data

url = Text.Combine({"https://query.wikidata.org/bigdata/ldf?",Uri.BuildQueryString(AllQueryParams)}),

nextLink = if (previous = null) then url else Value.Metadata(previous)[NextLink]?,

// if NextLink was set to null by the previous call, we know we have no more data

page = if (nextLink <> null) then GetPage(nextLink) else null

in

page

)

in

GetAllTriplesMatchingThe following support functions are used

GetNextLink

Takes the n-triple result from the LDF service and looks for the row with predicate http://www.w3.org/ns/hydra/core#nextPage, returning the object that is the URL of the next page of triples.

let

GetNextLink = (response) as nullable text =>

let

Matching = Table.SelectRows(response,each [Predicate] = "<http://www.w3.org/ns/hydra/core#nextPage>"),

Link = if Table.IsEmpty(Matching) then

null

else

Record.FieldOrDefault(Table.SingleRow(Matching), "Object"),

LinkCleaned = if Link <> null then

Text.Middle(Link,1,Text.Length(Link)-2)

else null

in

LinkCleaned

in

GetNextLinkGetPage

Given a URL to the LDF service retrieves the results in n-triples and converts them to a table, returning the next link as metadata.

let

GetPage = (url as text) as nullable table =>

let

// Request Ntriples from the LDF Service

Options = [Headers = [#"Accept"="application/n-triples"]],

// Convert to CSV, parsing for ' ' as the separator

data = Csv.Document(Web.Contents(url,Options),{"Subject", "Predicate", "Object", "Terminator"}," "),

noTerminator = Table.RemoveColumns(data,"Terminator"),

cleaned = Table.TransformColumns(noTerminator, {{"Subject", each CleanNTriple(_), type text},

{"Predicate", each CleanNTriple(_), type text},

{"Object", each CleanNTriple(_), type text}}),

// this cleaning breaks the hydra next page links so look back

nextLink = GetNextLink(noTerminator)

in

cleaned meta [NextLink = nextLink]

in

GetPageGenerateByPage

Repeatedly calls the LDF service through pagination to retrieve all triples matching the pattern.

let

GenerateByPage = (getNextPage as function) as table =>

let

listOfPages = List.Generate(

() => getNextPage(null),

(lastPage) => lastPage <> null,

(lastPage) => getNextPage(lastPage)

),

tableOfPages = Table.FromList(listOfPages,Splitter.SplitByNothing(),{"Column1"}),

firstRow = tableOfPages{0}?

in

if (firstRow = null) then

Table.FromRows({})

else

Value.ReplaceType(

Table.ExpandTableColumn(tableOfPages, "Column1", Table.ColumnNames(firstRow[Column1])),

Value.Type(firstRow[Column1])

)

in

GenerateByPageCleanNTriple

Removes the < and > characters from an individual value if present.

let

CleanNTriple = (triple) as nullable text =>

let

tripleCleaned = if Text.At(triple,0) = "<" and Text.At(triple,Text.Length(triple)-1) = ">" then

Text.Middle(triple,1,Text.Length(triple)-2)

else

triple,

cleanedString = ParseNTripleEscape(tripleCleaned)

in

cleanedString

in

CleanNTripleParseNTripleEscape

Unescapes Java style character encodings by pushing through the JSON parser. Would be nice if there was a more efficient way of doing this but I haven’t found one.

let

ParseNTripleEscape = (escape) as nullable text =>

let

resolved = Record.Field(Json.Document(Text.Combine({"{""e"": """,escape,"""}"})),"e")

in

resolved

in

ParseNTripleEscapePutting it all together

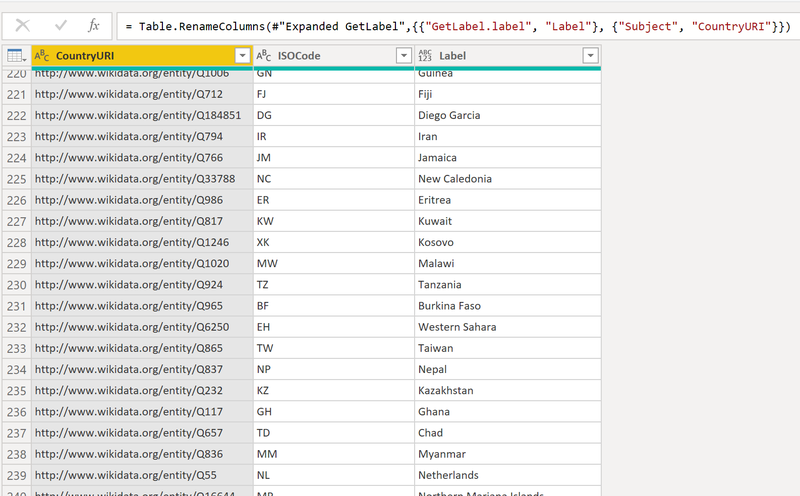

Given these functions, this script creates a country dimension from WikiData with ISO two digit code and English language label for each country.

let

pred = "http://www.wikidata.org/prop/direct/P297",

data = GetAllTriplesMatching(null,pred,null),

onlyMatches = Table.SelectRows(data,each [Predicate] = pred ),

dropPredicate = Table.RemoveColumns(onlyMatches,{"Predicate"}),

renamed = Table.RenameColumns(dropPredicate,{{"Object", "ISOCode"}}),

Labled = Table.AddColumn(renamed, "GetLabel", each GetLabel([Subject])),

expanded = Table.ExpandTableColumn(Labled, "GetLabel", {"label"}, {"GetLabel.label"}),

renamedLabel = Table.RenameColumns(expanded,{{"GetLabel.label", "Label"}, {"Subject", "CountryURI"}})

in

renamedLabelOne huge advantage over the ADF variant: it’s much faster - maybe a minute for this to run.

In theory these functions could be packaged as a Power BI Custom Connector. That’s not high on my list of to-dos but if you’re interested, tweet me @nonodename and, with enough enthusiasm I’ll figure it out!